You might remember Txty Jukebox, our free to use collaborative music web app that we built on top of the YouTube Data API. We were happy to find that our original version was well received and even got some press from the folks over at makeuseof.com. Well, we’ve finally got a chance to spend some time ( big thanks to our new hire Josh who led the charge ) to make improvements based on the feedback we received and re-branded it under jointdj.com!

The main idea behind our music inspired web application is to create an easy way for groups of people to collaboratively share and listen to song (and video) requests. Any user with a smart phone or computer can enter the event code provided by the event’s host on jointdj.com and start submitting songs to the event’s playlist. The “event” doesn’t always have to be a traditional party either, for example, we’ve been using Joint DJ ourselves in our office as a Pandora or Spotify replacement.

- One big lesson learned from our first go around with Txty Jukebox was that while it’s great when everyone at your event is engaged and the song queue is filled up you can run into awkward silences if the playlist runs of songs when people get distracted, say, doing work or playing an intense game of flip cup. In the past you had to wait until someone queued another song so it became a bit of a chore for the event host. To solve this issue and ensure there will never be a silent moment, we’ve created a new feature that lets the event host to pick a genre of music when they create an event from which a song will be randomly selected and played if a playlist ever runs out. For example, I could create an event with “Top 40 / Pop” as the auto fill genre. If at any point during my event the playlist is empty, all the sudden the latest Chainsmokerz song will magically be queued up!

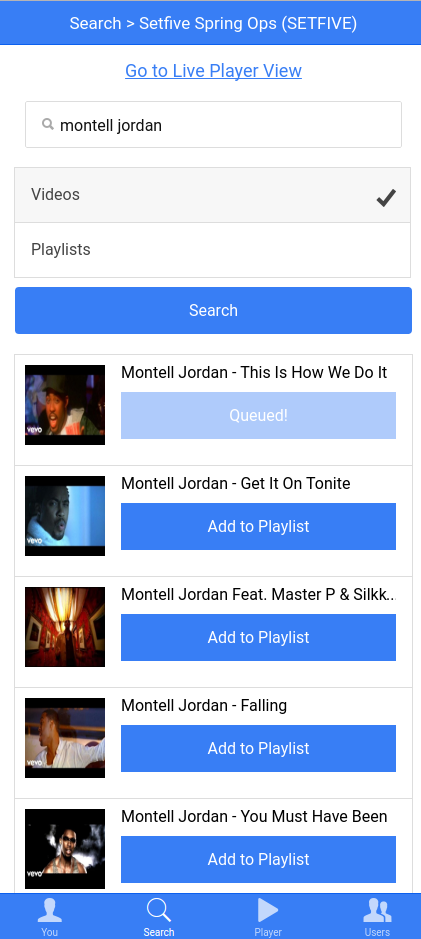

- Another issue we saw in the first version was that sometimes users didn’t get the exact song played that they were searching for. That was because we automatically selected the first result from Youtube regardless of whether it’s the desired result. For Joint DJ, we’ve added the ability for users to use an intuitive browser based UI to easily search for a song and then review the list of music video results from YouTube along with the thumbnail. Once the user finds exactly what song they want to play they can simply select it to add it to the event’s playlist.

- Lastly, we improved the design of the live player view where events users can watch and listen to the music videos associated with the requests. You’ll see “flash” messages when songs are added that show the artist, title and which “DJ” submitted it. Additionally we show the next 4-5 upcoming songs in the queue along with their thumbnails on the left side of the player window. Overall, the new look is more colorful and crisp and should be more impressive to the events users keeping them engaged, having fun, and contributing songs to the event. Below is a screenshot of what the live player view looks like: