Have you ever tried pitching an upgrade to management? Odds are, you didn’t walk away with a blank check. Maybe you’re a network administrator for a real estate company whose boss doesn’t understand why the cheaper network infrastructure isn’t always the best option for scalability. Or maybe you need to request an upgrade for an application that eats up a significant amount of your time every day in troubleshooting because it’s incompatible with other operating systems. The conversation goes something like this:

You: “Boss, we really need to upgrade [xyz] software package.”

Them: “Why do we need the upgrade? If it ain’t broke, don’t fix it.”

You: “Well, it’s creating a number of issues for our team. The manufacturer no longer supports the version we use, because it’s been obsolete for 10 years. Whenever an issue comes up, we have to come up with a workaround.”

Them: “How much is the upgrade?”

You: “It’ll be $X for a shared license for all team members.”

Them: “I just don’t think we have the money in the budget for that kind of upgrade. We have a lot more pressing projects requiring capital right now, and I can’t see us justifying that expense to our board.”

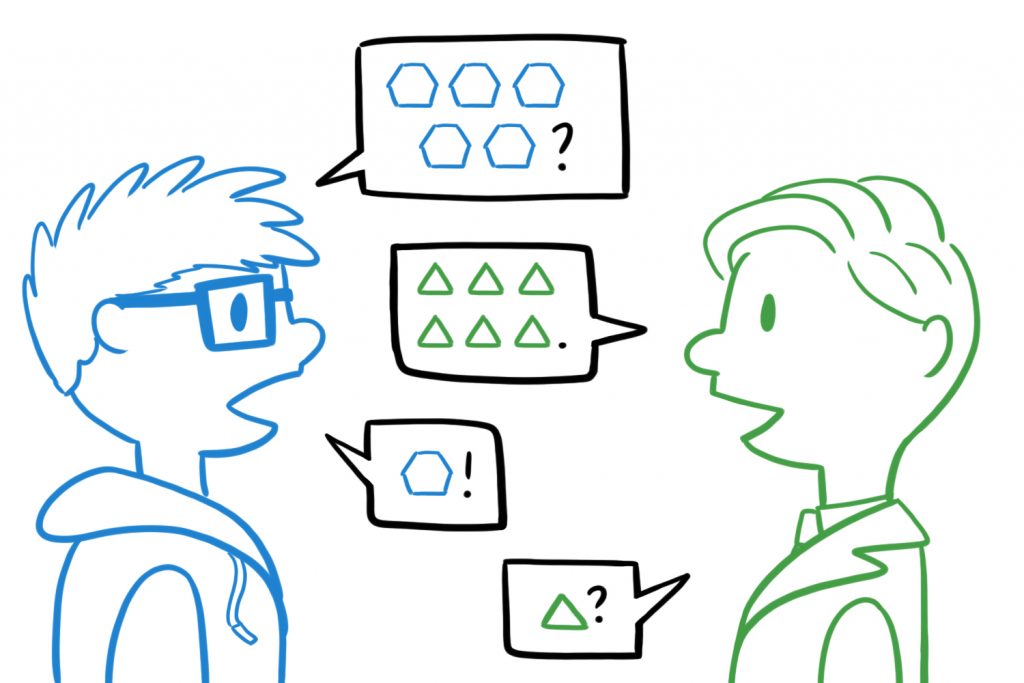

Such a request may not be well received because the two sides perceive the situation differently, particularly the cost-reward assessment of the solution. The management team may not speak the same language as the technical support staff or engineers, so it’s crucial to put the request into terms they will understand and listen to. Better yet, frame that request as an offer.

Here are three ways you can make that case, in language even your boss can understand.

1. Security

Technology has never become obsolete as quickly as it does today. Keeping up to date is not just a matter of having the best and newest techy toys, though; falling behind can lead to a security breach of personal information (like the Target PIN data breach of 2013), stolen identities, and stolen money.

Cyber security expenses are perhaps the hardest sell to make, because failure to upgrade presents a latent risk rather than an active one. It works until it doesn’t. Earlier in 2020, security companies like FireEye saw a reactionary boost in the stock market after the Twitter accounts of celebrities like Elon Musk were hacked.

As an engineer pitching an upgrade to management, convey the risk of not getting it and the potential fallout.

2. Developer Productivity

A software version upgrade can be money in the bank if it saves man hours by making tasks less labor intensive and more efficient. Use terms like “faster,” “leaner,” or the military favorite “force multiplier.” If you’ve got estimates on time allotted to a given project that can be broken down into hourly direct labor savings, that’s always a great selling point.
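To show how an estimate of hourly direct labor savings might be turned into a number for the pitch, here is a minimal Python sketch. The function names, the 48 working weeks per year, and every figure in the usage note are hypothetical placeholders; substitute your own team’s estimates.

```python
def annual_labor_savings(hours_saved_per_week, hourly_rate, team_size,
                         weeks_per_year=48):
    """Estimate yearly direct labor savings from an upgrade.

    All inputs are your own estimates; weeks_per_year=48 is an assumed
    default, not a standard figure.
    """
    return hours_saved_per_week * hourly_rate * team_size * weeks_per_year


def payback_months(upgrade_cost, annual_savings):
    """Months until cumulative savings cover the upgrade cost.

    Returns None when there are no savings to pay the cost back.
    """
    if annual_savings <= 0:
        return None
    return upgrade_cost / (annual_savings / 12)
```

For example, with hypothetical inputs of 2 hours saved per person per week, a $50 hourly rate, and a team of 5, `annual_labor_savings(2, 50, 5)` gives 24000. Against a hypothetical $12,000 license, `payback_months(12000, 24000)` gives 6.0, which translates to “the upgrade pays for itself in six months.”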

3. Hiring and Retention

The success of any project depends not just on the tools, but on the people using those tools; to a large degree, the more cutting edge your tools are, the more cutting edge the people in your employ will be. When it comes to hiring and retaining employees, one deciding factor will surely be how modern your operating environment is.

If your team uses obsolete tools, it may even be harder to find people with the relevant skills. Take Python 2 versus Python 3: the syntax and many features are quite different, and most new users are taught the most recent version, so it becomes a challenge to hire someone who’s willing to use (or learn) an obsolete version of the language.
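For readers who want a concrete sense of the Python 2 versus Python 3 gap, here is a short sketch of a few well-known differences. The code runs under Python 3; the Python 2 behavior is described only in comments, and the variable names are illustrative.

```python
# print is a function in Python 3.
# Python 2 also accepted the statement form: print "Upgrade approved"
# (that form is a SyntaxError in Python 3).
print("Upgrade approved")

# The / operator performs true division in Python 3.
# In Python 2, 7 / 2 on two integers floored the result to 3.
ratio = 7 / 2

# Floor division with // gives 3 in both versions.
floor_ratio = 7 // 2

# Python 3 strings are Unicode text; encoding yields a separate bytes type.
# In Python 2, a plain string literal was a byte string instead.
text = "caf\u00e9"
encoded = text.encode("utf-8")
```

None of these differences is large on its own, but together they mean code and habits do not carry over unchanged between the two versions.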

Whatever the case, it’s safe to say that the bottom line is among any company’s top priorities when it comes to spending. The more you can appeal to that and sell a tangible ROI for the cost of the upgrade, the more likely you are to hear a ‘yes.’

Another tip is to present multiple options: a good, a better, and a best option, with the best being the most expensive. People are often more likely to agree to something when it is presented as one of several options than when it stands alone.