Wow…it’s been a while!

A couple of weeks ago one of our clients approached us about helping them build an NFT (more on that later). In case you’re not “extremely online” and don’t know what web3 or NFTs are, here’s a quick primer.

Crypto and NFTs

As cryptocurrencies go, Bitcoin and Ethereum are the “OG” coins. They’re related projects but ultimately quite different. Ethereum differentiates itself through the Ethereum Virtual Machine (EVM), a global, distributed computing environment that uses ether (ETH), Ethereum’s native currency, as payment for executing computation. Executing pieces of code, known as smart contracts, on the EVM is broadly referred to as “web3”. The web3 vision is that it should be possible to move dozens of financial business processes onto the blockchain by using the EVM and smart contracts to encode the rules of those processes. Think stuff like insurance, stock issuance, and even sportsbooks.

Non-fungible tokens (NFTs) are a specific type of smart contract that encodes ownership of an asset on the Ethereum blockchain. What makes NFTs special is that, because of the decentralized nature of the blockchain and the EVM, it’s possible to freely trade NFTs and encode rules into their smart contracts. OpenSea is the de facto NFT marketplace where users can trade tokens without the original creators having to build any additional infrastructure. It’s like StubHub…but anyone can sell any NFT on it and anyone can access it.
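To make “encoding ownership” a bit more concrete, here’s a toy in-memory model in plain JavaScript (not Solidity). It’s only a sketch: the `ToyNftLedger` class and its method names are made up for illustration, and a real contract keeps this state on the blockchain rather than in a `Map`.

```javascript
// Toy model of the bookkeeping an NFT contract performs.
// Hypothetical illustration only: real contracts are written in Solidity
// and store this state on-chain.
class ToyNftLedger {
  constructor() {
    this.owners = new Map(); // tokenId -> owner address
    this.nextTokenId = 1;
  }

  // Create a new, unique token and assign it to `to`.
  mint(to) {
    const tokenId = this.nextTokenId++;
    this.owners.set(tokenId, to);
    return tokenId;
  }

  // Look up the current owner; asking about an unknown token is an error.
  ownerOf(tokenId) {
    if (!this.owners.has(tokenId)) {
      throw new Error(`token ${tokenId} does not exist`);
    }
    return this.owners.get(tokenId);
  }

  // Only the current owner may transfer a token. This is the kind of rule
  // a smart contract enforces without a central operator.
  transfer(caller, to, tokenId) {
    if (this.ownerOf(tokenId) !== caller) {
      throw new Error("caller is not the token owner");
    }
    this.owners.set(tokenId, to);
  }
}

const ledger = new ToyNftLedger();
const tokenId = ledger.mint("0xAlice");
ledger.transfer("0xAlice", "0xBob", tokenId);
console.log(ledger.ownerOf(tokenId)); // prints "0xBob"
```

Each token ID maps to exactly one owner, which is what makes the token non-fungible: token 1 and token 2 are tracked separately and aren’t interchangeable.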

In addition, because the EVM is Turing complete, it’s possible to enable extremely complex behaviors within the contract of an NFT. In theory, an NFT could represent ownership of anything from tickets to an event to digital collectibles. But as it turns out, digital collectibles are where most of the action is today. See, for example, Bored Ape Yacht Club: a set of its tokens sold for $24.4m in a Sotheby’s online auction (“Set of ‘Bored Ape’ NFTs sells for $24.4 mln in Sotheby’s online auction”).

OK, now that we’re all caught up, how does one create an NFT? There are more or less four steps:

- Develop a smart contract in Solidity which implements the EIP-721: Non-Fungible Token Standard

- Write some HTML/JS to interact with web3 via MetaMask to call your contract

- Publish the contract to the Ethereum blockchain

- Mint your tokens via the HTML/JS from step 2

Sounds simple enough, but how do you actually make it happen?

Here’s a walkthrough for launching an NFT in your local test environment.

You can develop the Solidity code in any text editor, but there are some IDE options, including an IntelliJ plugin and a larger list here: https://ethereum.org/en/developers/docs/ides/

It’s certainly possible to write an EIP-721 Solidity contract from scratch, but you’ll end up writing a lot of boilerplate code, which will increase the surface area for bugs. A sensible alternative is to use the OpenZeppelin framework, which provides you with a suite of battle-tested, open source libraries to bootstrap your smart contract. Additionally, there are a handful of working tutorials built on OpenZeppelin so that you can see a smart contract working end to end. Check out OpenSea Creatures.

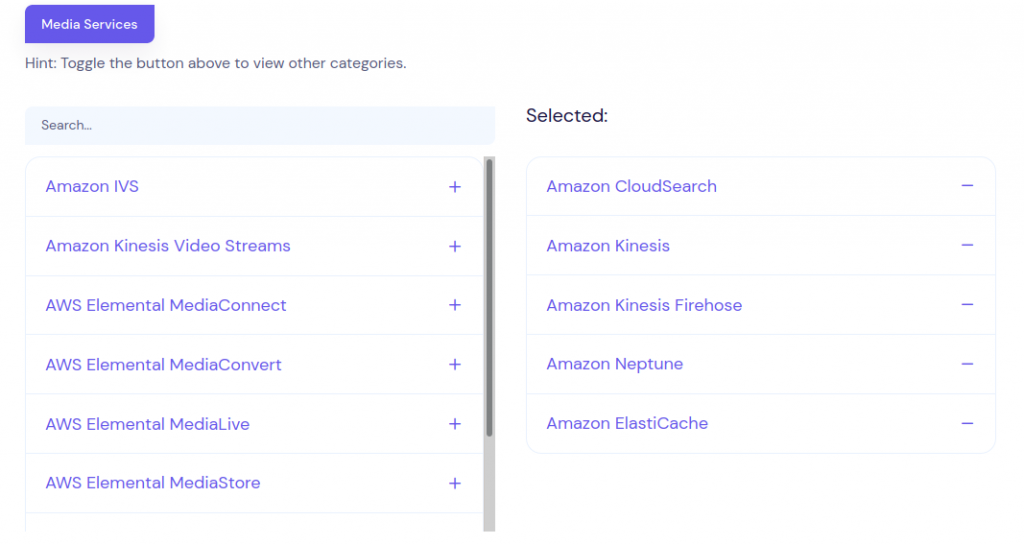

After you have your contract, the next piece is interacting with the blockchain to publish it. There are a few tools here that all work together:

- MetaMask – MetaMask is a browser-based crypto wallet and web3 provider. It allows you to store ether and interact with contracts on the Ethereum blockchain. You’ll ultimately use MetaMask to mint a token.

- Ganache – Ganache is a tool that allows you to run an Ethereum blockchain on your local machine.

- Truffle – Truffle is a suite of tools that makes it easier to interact with the blockchain. You’ll use Truffle to publish your contract and invoke methods within your contract.
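As a rough sketch of how Truffle gets pointed at a local Ganache chain, a `truffle-config.js` along these lines is typical. The host, port, and compiler version are assumptions, not values from our project: 8545 is the usual default for the Ganache command-line tool, while the Ganache desktop app commonly listens on 7545, so use whatever your setup reports.

```javascript
// truffle-config.js (sketch; values are assumptions, adjust to your setup)
module.exports = {
  networks: {
    // Truffle uses the network named "development" unless told otherwise.
    development: {
      host: "127.0.0.1", // local Ganache instance
      port: 8545,        // assumed; use the port your Ganache reports
      network_id: "*",   // accept whatever network id Ganache uses
    },
  },
  compilers: {
    solc: {
      version: "0.8.0", // placeholder; should satisfy your contract's pragma
    },
  },
};
```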

Once you have all the tooling set up, the steps you’ll need to take are:

- Set up MetaMask and note the mnemonic phrase your keys were initialized with

- Launch Ganache with that mnemonic so that your accounts have some test ether

- Use Truffle to publish your contract to your local Ganache blockchain

- Use the HTML/JS integration you wrote to invoke MetaMask to call the .mint() function in your contract
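The last step might look roughly like the sketch below. It assumes the page has loaded web3.js (so a `Web3` constructor is available), that MetaMask has injected its provider as `window.ethereum`, and that your contract exposes a `mint()` function taking no arguments. The `mintToken` helper, `nftAbi`, and `nftAddress` are hypothetical names; the ABI and address would come from your own Truffle build output.

```javascript
// Sketch: ask MetaMask for an account, then send a transaction calling mint().
// `provider` is an EIP-1193 provider such as MetaMask's window.ethereum, and
// `Web3` is the web3.js constructor. Both are passed in so the function can
// also be exercised outside the browser.
async function mintToken({ provider, Web3, abi, contractAddress }) {
  // Prompts the user to connect MetaMask and returns their account addresses.
  const accounts = await provider.request({ method: "eth_requestAccounts" });
  const from = accounts[0];

  const web3 = new Web3(provider);
  const contract = new web3.eth.Contract(abi, contractAddress);

  // Assumes a no-argument mint(); MetaMask asks the user to confirm
  // before the transaction is sent.
  return contract.methods.mint().send({ from });
}

// In the browser (nftAbi and nftAddress are placeholders):
// mintToken({ provider: window.ethereum, Web3, abi: nftAbi, contractAddress: nftAddress })
//   .then((receipt) => console.log("minted in tx", receipt.transactionHash));
```

If your `mint` function takes arguments (a recipient address or a token URI, say), pass them in the `mint(...)` call; the rest of the flow stays the same.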

Congratulations, you just minted your first NFT in test!

The process for deploying an NFT live is effectively the same, except that you’d need to buy some real ether and you’d point Truffle at the live network when you publish your contract.

Hope this was helpful, and we’ll add more web3-related content as we continue to build solutions on it!