Note: This post originally appeared at Codeburst

At Setfive Consulting we’ve become big fans of using TypeScript on the frontend and have recently begun adopting it for backend nodejs projects as well. We’ve picked up a couple of tips while setting up these projects that we’re excited to share here!

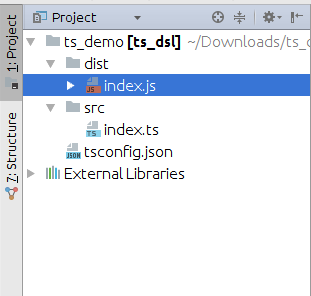

Directory structure

For most nodejs projects any directory layout will work, and what you pick is a matter of personal preference. TypeScript is similar, but to get the TypeScript compiler to generate JavaScript code into a separate “dist/” directory you’ll want to keep your code inside its own directory, like “src/”, within your project. So you’ll want a layout with a “src/” directory for your sources, a “dist/” directory for the compiler output, and a tsconfig.json file at the root of the project.
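A minimal sketch of such a layout, assuming an entry point at “src/index.ts”. Only “src/”, “dist/” and tsconfig.json come from the text; the project name and the other file names are illustrative:

```text
my-project/            (hypothetical project name)
├── dist/              (generated JavaScript, written by the compiler)
├── src/
│   └── index.ts       (your TypeScript sources)
├── package.json
└── tsconfig.json
```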

And the compiler will produce JavaScript code in “dist/” from your TypeScript sources in “src/”.

Setup tsconfig.json

You’ll want a tsconfig.json file in your project to configure the behavior of the TypeScript compiler. tsconfig.json is a JSON configuration file that “tsc” reads automatically when it’s present, so you don’t have to pass compiler flags by hand. You can find an exhaustive list of the available options in the TypeScript documentation. We’ve been using a small configuration as a solid starting point.
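A minimal sketch of such a tsconfig.json, assuming CommonJS output for node. This is not necessarily the exact file we started from: the “target”, “module” and “noImplicitAny” values are assumptions that you should adjust to your node version and taste. “noImplicitAny” is included because the missing-typings error discussed later in this post is only reported when it (or “strict”) is enabled.

```json
{
  "compilerOptions": {
    "target": "es2017",
    "module": "commonjs",
    "outDir": "dist",
    "sourceMap": true,
    "noImplicitAny": true
  },
  "include": ["src/**/*"]
}
```

With a file like this in place, running “tsc” from the project root should pick it up without any extra flags.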

From a build perspective this will configure a couple of things for you:

- Source maps are enabled (the “sourceMap” option), so you’ll be able to use node’s DevTools integration to view TypeScript sources alongside your JavaScript

- The compiler will output into a “dist/” folder

- And it’ll compile all of your source files under the “src/” directory

ts-node and nodemon

One of the stumbling blocks to using TypeScript with nodejs is the required compilation step. At face value, it seems like the workflow would be to edit a TypeScript file, run the compiler, and then run the generated JavaScript on node. Thankfully, with ts-node and nodemon that’s a workflow you won’t have to suffer through.

ts-node is basically a wrapper around your nodejs installation that lets you run TypeScript files directly, without running the compiler yourself as a separate step (it compiles the files in memory as they are loaded). Its README highlights how it works:

TypeScript Node works by registering the TypeScript compiler for the .ts, .tsx and – when allowJs is enabled – .js extensions. When node.js has a file extension registered (the require.extensions object), it will use the extension internally with module resolution. By default, when an extension is unknown to node.js, it will fallback to handling the file as .js (JavaScript).

So with ts-node you’ll be able to run something like “ts-node src/index.ts” to run your code.
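To make that concrete, here is a small sketch of what a “src/index.ts” might contain. The “Greeting” interface and “greet” function are hypothetical names made up for illustration; nothing here is specific to ts-node beyond the fact that the file is TypeScript.

```typescript
// src/index.ts (hypothetical contents, for illustration only)

// A plain interface: it is erased at compile time, so it adds no runtime cost.
interface Greeting {
  name: string;
  excited: boolean;
}

// The parameter and return type annotations are checked by the compiler
// before the code runs.
function greet(greeting: Greeting): string {
  const punctuation = greeting.excited ? "!" : ".";
  return `Hello, ${greeting.name}${punctuation}`;
}

console.log(greet({ name: "ts-node", excited: true }));
```

Running “ts-node src/index.ts” on a file like this should print the greeting, and passing a value of the wrong shape to “greet” should be reported as a type error instead of running.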

nodemon is the second piece of the puzzle. It’s a node utility that will monitor your source files for changes and automatically restart a node process for you. Perfect for building express or any other server apps! We’ve been using a nodemon.json config file for this.
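A minimal sketch of such a nodemon.json, assuming your sources live under “src/” with an entry point at “src/index.ts”. The entry point path is an assumption, and this is not necessarily the exact file we use:

```json
{
  "watch": ["src"],
  "ext": "ts",
  "exec": "ts-node ./src/index.ts"
}
```

Here “watch” and “ext” tell nodemon which directory and file extension to monitor, and “exec” is the command it re-runs on every change.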

And then you’ll be able to just invoke “nodemon” from the root of your project.

Remember “@types/” packages

Since you’re writing nodejs code, chances are you’re going to want to leverage JavaScript libraries. TypeScript can interoperate with JavaScript, but for the compiler to run without errors (at least with “noImplicitAny” enabled) you’ll need to provide “.d.ts” typings for the libraries you’re using. For example, trying to compile the following:

import * as _ from "lodash";

console.log(_.range(0, 10).join(","));

Will result in a TypeScript error:

src/index.ts(1,20): error TS7016: Could not find a declaration file for module 'lodash'. '/home/ashish/Downloads/node_modules/lodash/lodash.js' implicitly has an 'any' type.

Try `npm install @types/lodash` if it exists or add a new declaration (.d.ts) file containing `declare module 'lodash';`

Even though the output JavaScript file was successfully generated.

The “.d.ts” files are type definitions for a JavaScript library describing the types used, function signatures, and any other type information the compiler might need.
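As a rough illustration of what such a file looks like, here is a sketch of a hand-written declaration for an untyped library. The module name “legacy-range”, the file path, and the function signature are all hypothetical; real typings for a library like lodash are far larger.

```typescript
// types/legacy-range.d.ts (hypothetical file and module name, for illustration only)
declare module "legacy-range" {
  /** Returns the integers from start (inclusive) up to end (exclusive). */
  export function range(start: number, end: number): number[];
}
```

A declaration like this contains no runtime code. It only tells the compiler what the JavaScript module exports, so that imports from it are type checked.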

Several popular JavaScript libraries, like moment, have begun shipping the typings files within their main project but many others, like lodash, haven’t. In order to get libraries that don’t have the “.d.ts” files within their main project to work you’ll have to install their respective “@types/” packages. The “@types/” namespace is maintained by DefinitelyTyped but the definitions themselves have been written by contributors. Installing “@types/” packages is easy:

npm install --save @types/lodash

And now the compiler will run without any errors.

Off to the races!

At this point you should have a solid foundation for a TypeScript-powered nodejs project. You’ll be able to take advantage of TypeScript’s powerful type system and nodejs’ enormous library ecosystem, and enjoy an easy-to-use “save and reload” workflow. And as always, I’d love any feedback or other tips!

Thinking about adopting TypeScript at your organization? We’d love to chat.